ALTENDEITERING, Marcel; GUGGENBERGER, Tobias Moritz. Designing Data Quality Tools: Findings from an Action Design Research Project at Boehringer Ingelheim. In: European Conference on Information Systems (ECIS). 2021.

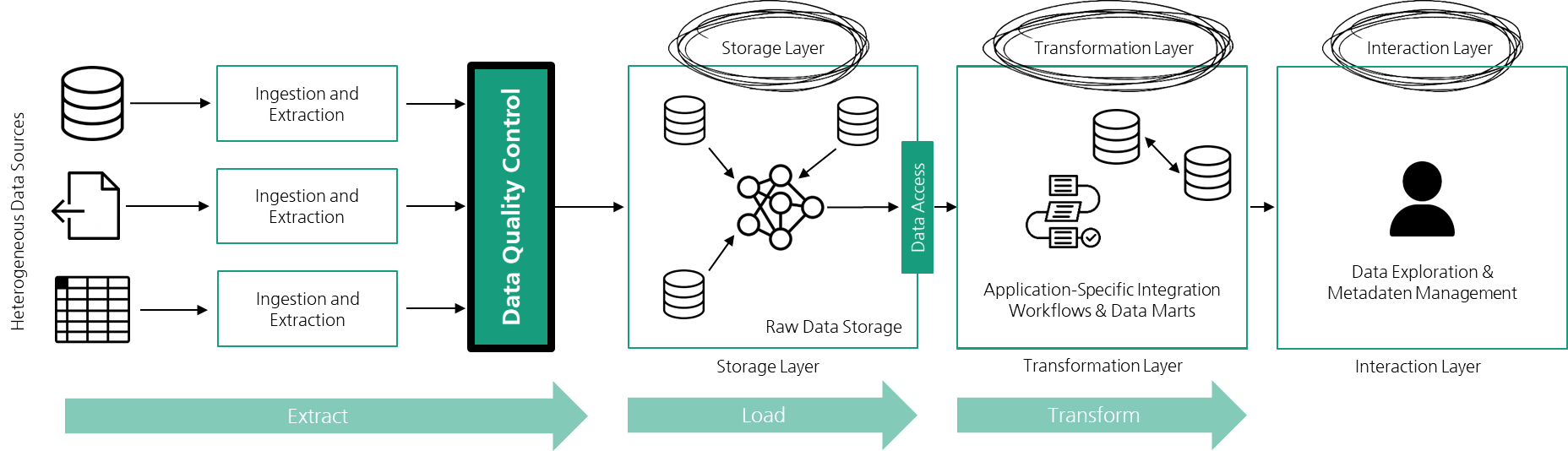

TEBERNUM, Daniel; ALTENDEITERING, Marcel; HOWAR, Falk. DERM: A Reference Model for Data Engineering. In: International Conference on Data Science, Technology and Applications (DATA). 2021.

ALTENDEITERING, Marcel; DÜBLER, Stephan. Scalable Detection of Concept Drift: A Learning Technique Based on Support Vector Machines. Procedia Manufacturing, 2020, Vol. 51, pp. 400-407.

AMADORI, Antonello; ALTENDEITERING, Marcel; OTTO, Boris. Challenges of Data Management in Industry 4.0: A Single Case Study of the Material Retrieval Process. In: International Conference on Business Information Systems. Springer, Cham, 2020. pp. 379-390.

HENZE, Jasmin; HOUTA, Salima; SURGES, Rainer; KREUZER, Johannes; BISGIN, Pinar. Multimodal Detection of Tonic-Clonic Seizures Based on 3D Acceleration and Heart Rate Data from an In-Ear-Sensor. In: Del Bimbo A. et al. (eds.) Pattern Recognition. ICPR International Workshops and Challenges. ICPR 2021. Lecture Notes in Computer Science, Vol. 12661. Springer, Cham, 2021. ISBN: 978-3-030-68762-5

BISGIN, Pinar; BURMANN, Anja; LENFERS, Tim. REM Sleep Stage Detection of Parkinson’s Disease Patients with RBD. In: International Conference on Business Information Systems. Springer, Cham, 2020. pp. 35-45. ISBN: 978-3-030-53337-3

MEISTER, Sven; HOUTA, Salima; BISGIN, Pinar. Mobile Health und digitale Biomarker: Daten als „neues Blut“ für die P4-Medizin bei Parkinson und Epilepsie [Mobile health and digital biomarkers: data as "new blood" for P4 medicine in Parkinson's disease and epilepsy]. In: mHealth-Anwendungen für chronisch Kranke. Springer Gabler, Wiesbaden, 2020. pp. 213-233. ISBN: 978-3-658-29133-4

HOUTA, Salima; BISGIN, Pinar; DULICH, Pascal. Machine Learning Methods for Detection of Epileptic Seizures with Long-Term Wearable Devices. In: Eleventh International Conference on eHealth, Telemedicine, and Social Medicine (eTELEMED). 2019. pp. 108-113. ISBN: 978-1-61208-688-0

BISGIN, P.; MEISTER, S.; HAUBRICH, C. Erkennen von parkinsonassoziierten Mustern im Schlaf und Neurovegetativum [Detection of Parkinson's-associated patterns in sleep and the autonomic nervous system]. In: 64. Jahrestagung der Deutschen Gesellschaft für Medizinische Informatik, Biometrie und Epidemiologie e. V. (GMDS), Dortmund, 2019. Abstract 44.

ALTENDEITERING, Marcel; TOMCZYK, Martin. A Functional Taxonomy of Data Quality Tools: Insights from Science and Practice. In: Wirtschaftsinformatik 2022 Proceedings. 2022.

ALTENDEITERING, Marcel. Mining Data Quality Rules for Data Migrations: A Case Study on Material Master Data. In: International Symposium on Leveraging Applications of Formal Methods. Springer, Cham, 2021. pp. 178-191.

SALVI, Rutuja, et al. Vascular Auscultation of Carotid Artery: Towards Biometric Identification and Verification of Individuals. Sensors, 2021, Vol. 21, No. 19, Art. 6656.

FIEGE, Eric, et al. Automatic Seizure Detection Using the Pulse Transit Time. arXiv preprint arXiv:2107.05894, 2021.

Fraunhofer Institute for Software and Systems Engineering